Robotic Database - Single Infrastructure | TERRINet

Address: Adenauerring 2, 76131 Karlsruhe, Germany

Website

http://h2t.anthropomatik.kit.edu/english

Scientific Responsible

Tamim Asfour

About

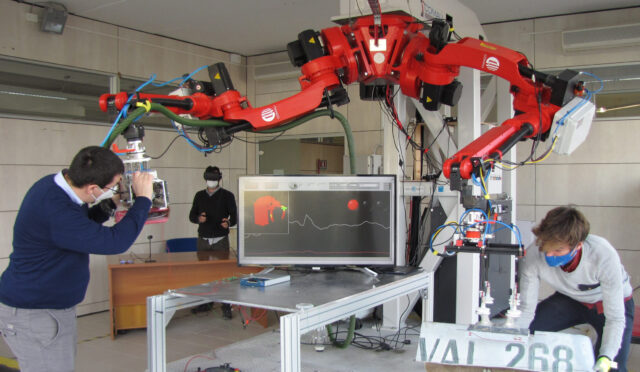

The infrastructure offered by the Chair of High Performance Humanoid Technologies consists of several state-of-the-art humanoid robot systems and humanoid components used in research projects and education. The first major part of the infrastructure is the KIT robot kitchen environment, in which the robots ARMAR-IIIa and ARMAR-IIIb operate. The installation provides unique opportunities to conduct research on grasping and dexterous manipulation in human-centered environments, visuo-haptic object exploration, and learning from human observation and from experience. The second major part of the infrastructure is the KIT Motion Capture Studio, which provides a unique environment for capturing and analyzing human motion and thus supports research on learning from human observation and robot programming by demonstration. Users of the infrastructure have access to the expertise of the scientific staff of the Chair of High Performance Humanoid Technologies as well as of other employees of the Institute of Anthropomatics and Robotics and of the KIT Center Information – Systems – Technologies (KCIST), which bundles interdisciplinary competences across KIT, in particular from informatics, economics, electrical and mechanical engineering, information technology, and social science. This is especially valuable given the breadth of competences at KCIST, which include machine intelligence, robotics, human-machine interfaces, algorithmics, software engineering, cyber security, cloud computing and scientific computing, secure communication systems, and big data technologies.

Presentation of platforms

Available platforms

ARMAR-6

ARMAR-6 is a collaborative humanoid robot assistant for industrial environments. It is designed to recognize when help is needed and to allow easy and safe human-robot interaction; the robot’s comprehensive sensor setup includes various camera systems, torque sensors and systems for speech recognition. The dual-arm system combines human-like kinematics with a payload of 10 kg, which allows dexterous, high-performance dual-arm manipulation. In combination with its telescopic torso joint and a pair of underactuated five-finger hands, ARMAR-6 can grasp objects on the floor as well as work at a height of 240 cm. The mobile platform includes holonomic wheels, battery packs and four high-end PCs for autonomous on-board data processing.

The software architecture is implemented in ArmarX (https://armarx.humanoids.kit.edu). High-level functionalities such as object localization, navigation, grasping and planning are already implemented and available.

ARMAR-III in a robot kitchen

The humanoid robot ARMAR-III has been designed to help in the kitchen, e.g. to fetch objects from the fridge and to load the dishwasher. It has a total of 43 DoF. A mobile platform equipped with three laser scanners allows the robot to navigate the kitchen environment. The arms have 7 DoF each and carry five-fingered hands with 8 DoF each. A force-torque sensor is available in each wrist. ARMAR-III uses the Karlsruhe Humanoid Head with 7 DoF. For vision, four digital cameras are integrated into the head: each eye has a wide-angle and a narrow-angle camera for peripheral and foveal vision, respectively. There are four PCs inside the mobile base, which run Linux as their operating system. The robot software was originally written in MCA, but the robot can also be controlled via the newer ArmarX framework (https://armarx.humanoids.kit.edu). High-level functionalities such as object localization, navigation, grasping and planning are already implemented and available.
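The DoF figures quoted above can be cross-checked with a little arithmetic. The following is a minimal sketch, assuming two arms with one hand each (the text says "each" but does not give a count); the constant and function names are illustrative only and are not part of MCA or ArmarX. It shows how many of the 43 DoF are itemized in the description and how many are left for parts the text does not break down.

```python
# DoF figures stated in the ARMAR-III description.
TOTAL_DOF = 43
ARM_DOF = 7    # per arm
HAND_DOF = 8   # per five-fingered hand
HEAD_DOF = 7   # Karlsruhe Humanoid Head

# Assumption: two arms, each carrying one hand.
NUM_ARMS = 2


def itemized_dof() -> int:
    """DoF accounted for by the arms, hands and head."""
    return NUM_ARMS * (ARM_DOF + HAND_DOF) + HEAD_DOF


def remaining_dof() -> int:
    """DoF that the description does not itemize."""
    return TOTAL_DOF - itemized_dof()


if __name__ == "__main__":
    print("itemized DoF:", itemized_dof())
    print("remaining DoF:", remaining_dof())
```

Under these assumptions, arms, hands and head account for 37 DoF, so 6 DoF belong to parts of the robot that the description does not itemize.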

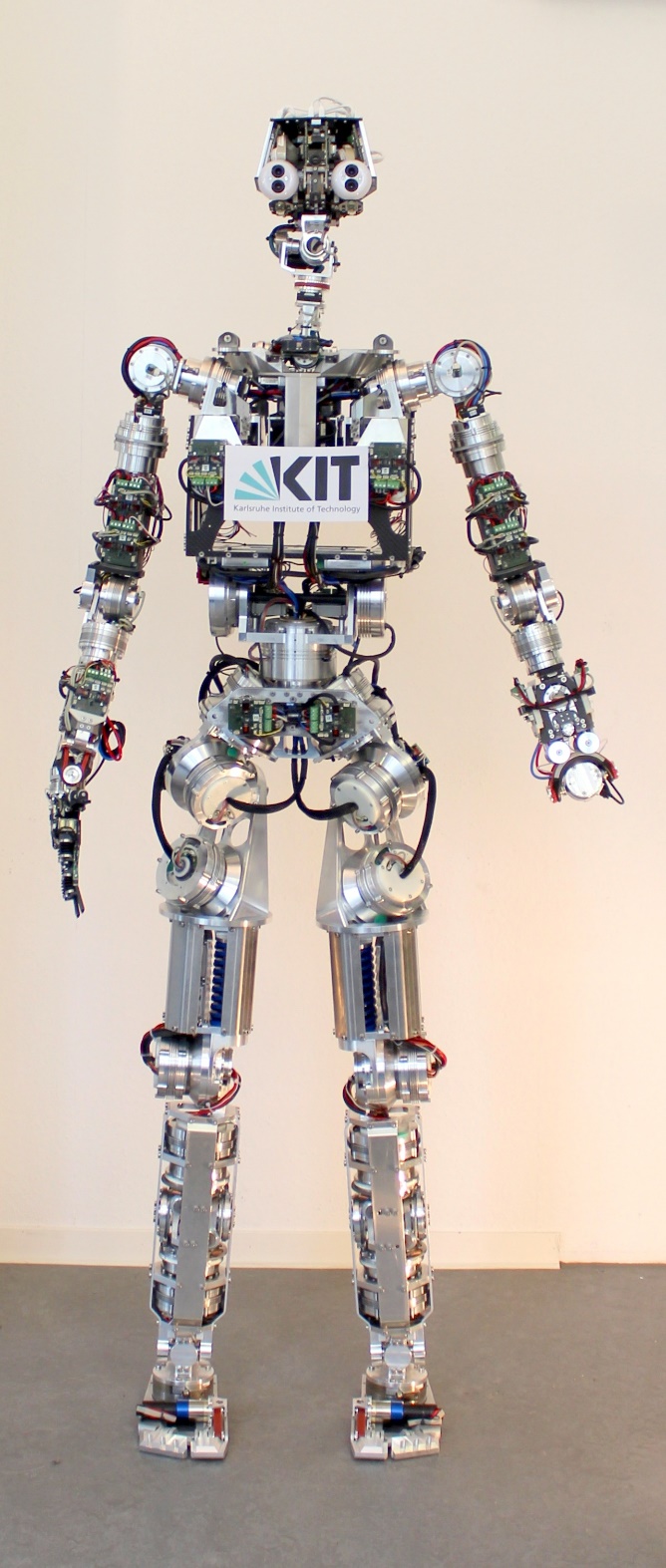

ARMAR-4

ARMAR-4 is a full-body humanoid robot with torque control capabilities in its arm, leg and torso joints. It has 63 active degrees of freedom with 63 actuators overall, including feet, neck, hands and eyes. It features more than 200 individual sensors for position, temperature and torque measurement, 76 microcontrollers for low-level data processing and 3 on-board PCs for perception, high-level control and real-time functionalities. The robot stands 170 cm tall and weighs 70 kg. Each leg has six degrees of freedom, mimicking the flexibility and range of motion of the human leg. For maximum range of motion and dexterity, each arm has eight degrees of freedom. The kinematic similarity to the human body facilitates the mapping and execution of human motions on the robot. The four end-effectors (hands and feet) are equipped with sensitive 6D Force/Torque sensors to accurately capture physical interaction forces and moments between the robot and its environment. The robot’s two eyes are each equipped with two cameras for wide and narrow angle vision. The three control PCs (two in the torso, one in the head) run Ubuntu 14.04 and control the robot via the ArmarX software framework (https://armarx.humanoids.kit.edu), wherein high-level functionalities like object localization, grasping and planning are already implemented and available.

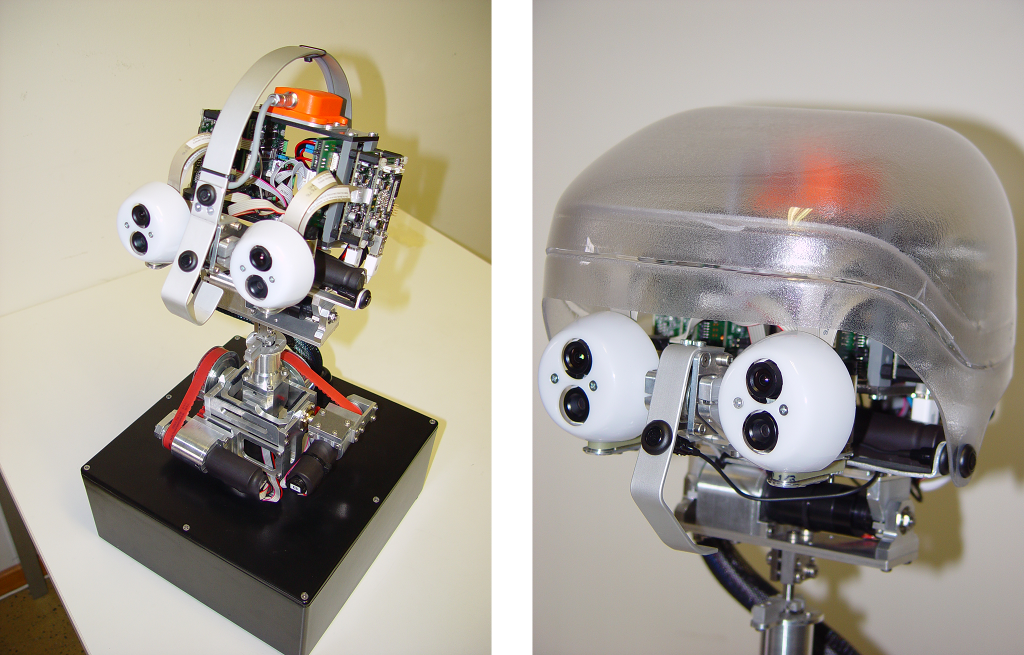

The Karlsruhe Humanoid Head

The Karlsruhe Humanoid Head is the head used in both ARMAR-IIIa and ARMAR-IIIb. It is also a stand-alone robot head for studying various visual perception tasks in the context of object recognition and human-robot interaction. The active stereo head has a total of 7 DoF (4 in the neck and 3 in the eyes), six microphones and a 6D inertial sensor. Each eye is equipped with two digital color cameras, one with a wide-angle lens for peripheral vision and one with a narrow-angle lens for foveal vision, to allow simple visuo-motor behaviors. The head's software was originally written in MCA, but the head can also be controlled via the robot development environment ArmarX (https://armarx.humanoids.kit.edu).

Human Motion Analysis with Vicon

The human motion capture studio provides a unique facility for capturing and analyzing human motion as well as for mapping it to humanoid robots. The studio is equipped with 14 Vicon MX cameras (1 megapixel resolution and 250 fps), a microphone array and several Kinect cameras. Several tools are available for post-processing recorded motion data, for normalization, for synchronizing different sensor modalities, and for visualization. In addition, a reference model of the human body (the Master Motor Map, MMM) and a standardized marker set allow unified representations of captured human motion and the transfer of subject-specific motions to robots with different embodiments. The motion data in the database covers human as well as object motions. The raw motion data entries are enriched with additional descriptions and labels. Besides the captured motion in its raw format (e.g., marker motions), information about the subject's anthropometric measurements and the setup of the scene, including environmental elements and objects, is provided. The motions are annotated with motion description tags that allow efficient search for certain motion types through structured queries.
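To illustrate what a structured query over motion description tags might look like, here is a minimal sketch in Python. It does not use the actual database interface, which is not described here; the `MotionEntry` record, its fields, the `query` function and the sample entries are all hypothetical and chosen only to mirror the kinds of information mentioned above (tags, subject anthropometrics, scene objects).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionEntry:
    """Hypothetical record for one captured motion (not the real schema)."""
    entry_id: int
    subject_height_cm: float   # example anthropometric measurement
    objects: frozenset         # objects present in the scene
    tags: frozenset            # motion description tags


def query(entries, all_of=(), none_of=()):
    """Return entries that carry every tag in all_of and no tag in none_of."""
    required = set(all_of)
    excluded = set(none_of)
    return [
        e for e in entries
        if required <= e.tags and not (excluded & e.tags)
    ]


# Made-up sample data for illustration only.
ENTRIES = [
    MotionEntry(1, 178.0, frozenset({"cup"}),
                frozenset({"grasp", "right hand"})),
    MotionEntry(2, 165.0, frozenset(),
                frozenset({"walk", "forward"})),
    MotionEntry(3, 182.0, frozenset({"cup", "table"}),
                frozenset({"grasp", "left hand", "pour"})),
]

if __name__ == "__main__":
    for entry in query(ENTRIES, all_of=["grasp"], none_of=["pour"]):
        print(entry.entry_id, sorted(entry.tags))
```

Representing tags as sets keeps each query a pair of subset and intersection checks; a real database would more likely index the tags, but the query semantics would be similar.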

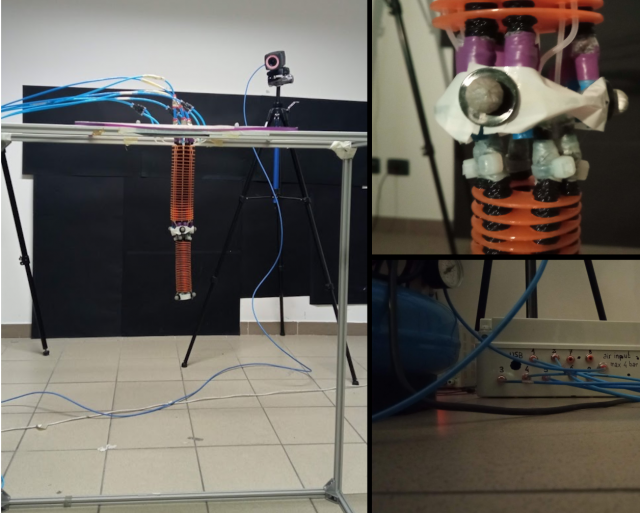

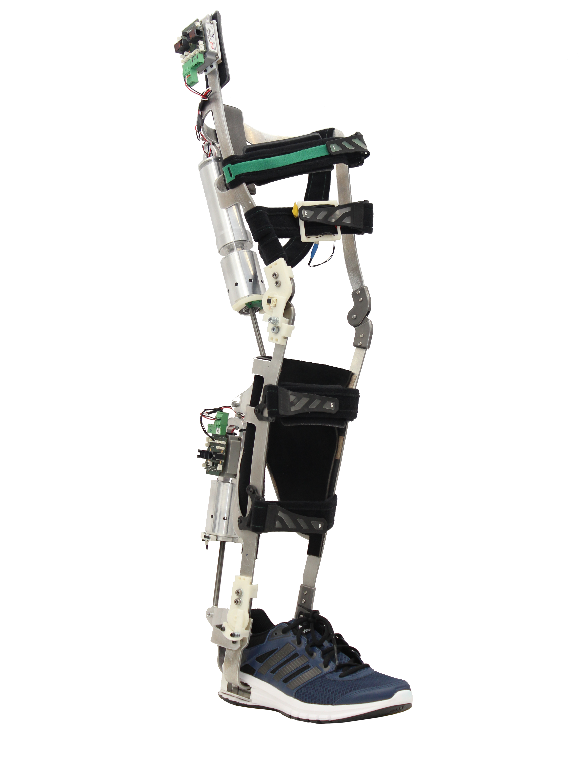

KIT-EXO-1

The exoskeleton KIT-EXO-1 was developed to augment human capabilities and for use in rehabilitation applications. It has two active DoF, one at the knee and one at the ankle joint, to support flexion/extension movements. The linear actuators consist of brushless DC motors coupled to planetary roller screws and an optional serial spring. They are equipped with absolute and relative position encoders as well as a force sensor and can be controlled via the ArmarX software framework (https://armarx.humanoids.kit.edu). Eight additional force sensors distributed over the exoskeleton measure interaction forces between user and exoskeleton at the thigh, shank and foot. They can be used for research on intuitive exoskeleton control or to assess the kinematic compatibility of new joint mechanisms.

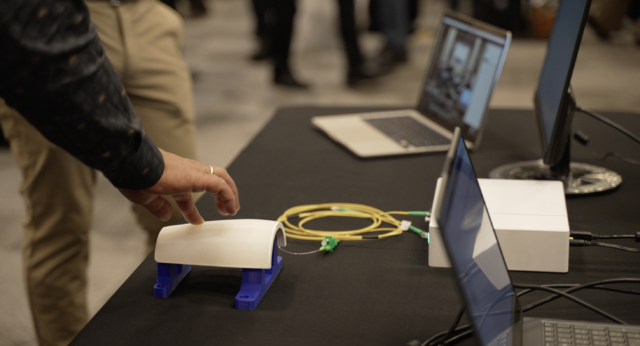

KIT Prosthetic Hand

The KIT Prosthetic Hand is a five-finger, 3D-printed hand prosthesis with an underactuated mechanism, sensors and an embedded control system. It has an RGB camera integrated in the base of the palm and a colour display on the back of the hand. All functional components are integrated into the hand, which is dimensioned according to a 50th percentile male human hand. The hand is accessible via a simple communication interface (a serial interface, used directly or via Bluetooth) and can also be controlled via buttons. The camera and display allow for studies on vision-based semi-autonomous grasping and user feedback in prosthetics. As a stand-alone device, the hand is easy to use in different environments and settings.

NAO Robots

NAO is an autonomous, programmable humanoid robot developed by Aldebaran Robotics in 2006 (now SoftBank Robotics [1]). The robot is commonly used for research and education purposes. It is best known for its participation in the RoboCup Standard Platform Soccer League [2]. In this league the robots play fully autonomously and make decisions independently of each other, but can also cooperate with each other. NAO can be programmed graphically via the program Choregraphe, which is commonly used by young students with no programming experience. The robot can also be programmed in Python and C++, which allows a wide range of complex tasks to be accomplished. The robot can also be used to demonstrate how mathematical modelling of humanoid robots helps to solve complex tasks.