Past Trans-national Accesses

Universidad de Sevilla (USE)

Robotics, Vision and Control Group

MBZIRC Hexarotor

MSFOC-ESACMS - Multi-Source Sensor Fusion for Object Classification and Enhanced Situational Awareness within Cooperative Multi-Robot Systems

Qingqing Li

In urban and other dynamic environments, autonomous cars must be able to understand what is happening around them and predict potentially dangerous situations. This includes predicting pedestrian movement (e.g., pedestrians might cross the road unexpectedly) and taking extra safety measures where construction or repair work is under way. This research focuses on enhancing an autonomous vehicle’s understanding of its environment through cooperation with other agents operating in the same environment. It fuses information from sensors placed on different devices and applies distributed machine learning algorithms to improve the safety and stability of autonomous cars.

The proposed research is multidisciplinary. It will leverage recent advances in three-dimensional convolutional neural networks, sensor fusion, new communication architectures and the fog/edge computing paradigm. The project will affect how autonomous vehicles, and self-driving cars in particular, understand and interact with each other and their environment.

University of the West of England (UWE Bristol)

Robotics Innovation Facility

Universal Robots UR5

FiGito - Peal of seedlings, using the shortest possible time without significantly altering the initial characteristics of the seedling, using the UR5 robotic arm.

Natali Fiorella Alcorta Santisteban

General objective:

- Using the UR5 robotic arm, perform the peal of seedlings using the shortest possible time without significantly altering the initial characteristics of the seedling.

Specific objectives:

- Get to know and configure the UR5 robotic arm.

- Develop the control algorithms of the UR5 robotic arm, aimed at experimental tests of seedling peal.

- Perform several experimental tests of seedling peal and collect the data obtained in each of these trials.

- Analysis and processing of the data obtained in the experimental tests.

The university where I work is still developing and we do not have laboratories as advanced as those in Europe. However, I hope we can adopt some protocols and ways of working and apply them in northern Peru. We have similar projects, to be developed in the coming years, that use robotic claws coupled to Cartesian robots and are aimed at Peruvian agribusiness. The UR5 robotic arm is a very advanced object manipulation platform. It will serve as a basis for learning how to manipulate the seedlings with the robot's claw, because it can replicate the same movement many times with high precision and in less time, which reduces the peal time of the seedlings.

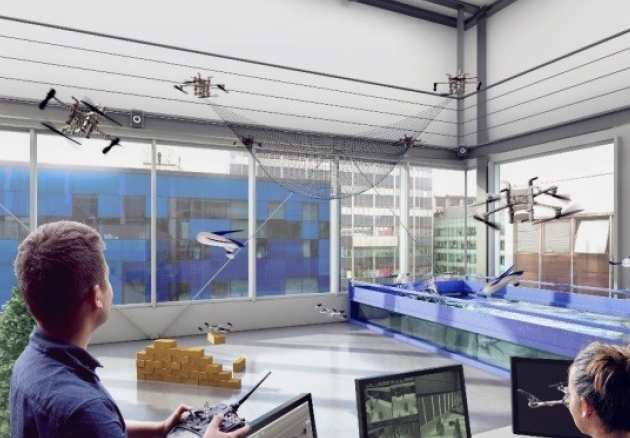

École Polytechnique Fédérale de Lausanne (EPFL)

Laboratory of Intelligent Systems

Motion capture arena

Index-Free Formation Control of multiple UAVs with Minimal Communication

Jorge Peña Queralta

Pattern formation algorithms for swarms of robots can find applications in many fields from surveillance and monitoring to rescue missions in post-disaster scenarios. Existing algorithms that enable complex configurations usually require a centralized control, a communication protocol among the swarm in order to achieve consensus, or predefined instructions for individual agents. Nonetheless, trivial shapes such as flocks can be accomplished with low sensing and interaction requirements. We propose a pattern formation algorithm that enables a variety of shape configurations with a distributed, communication-free and index-free implementation with collision avoidance. Our algorithm is based on a formation definition that does not require indexing of the agents. The aim of this experiment is to test in a real scenario formation control algorithms that have been developed theoretically and tested through computer simulations or basic experiments with ground robots.

These experiments are part of a project to develop a swarm of drones capable of helping in search and rescue (SAR) operations by finding people in large water bodies (sea or lakes). Research outcomes from this project will directly contribute toward next-generation autonomous rescue operations, which have great potential for saving human lives.

Imperial College London (IMPERIAL)

The Hamlyn Centre

Robotic Fast Prototyping Platform

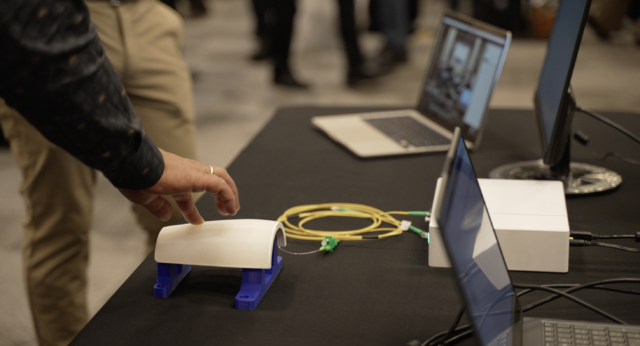

RASIS - Precision characterization of a micro-surgery robotic platform.

Dr.-Ing. M. Ali Nasseri, Krassimir Ovcharov

Characterization of positioning accuracy, both in component space and in Cartesian space, plays an important role in validating the precision of micromanipulation systems. Micro robots designed for medical and surgical applications are among the most critical micromanipulation systems, with specific precision requirements. Since 2011 we have been developing a hybrid parallel-serial micromanipulation system to assist surgeons in performing micron-scale operations such as retinal surgery in ophthalmology. There is now a need to perform a precision assessment. Within this project we plan to perform this assessment in two steps, for positions as well as for forces.

The results of these experiments will be used for the design and development of surgical robots for precision operations. Even though the current application is in ophthalmology, the results are not limited to visual science and can be generalized to other medical and surgical fields where micron-scale manipulation is needed. Clinical compatibility will be a main part of this work.

Universidad de Sevilla (USE)

Robotics, Vision and Control Group

AMUSE

VILO - visual Lidar odometry based on monocular camera

Sherif A.S. Mohamed

• Implement a visual odometry module based on images captured by a single camera, to avoid the baseline problem of stereo cameras.

• Implement an algorithm to build a depth map from LIDAR input.

• Implement a tightly coupled fusion algorithm that fuses the two modules to achieve more robustness and better accuracy.
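As an illustration of the depth-map objective above, the following minimal sketch projects lidar points into a camera image with a pinhole model and keeps the nearest range per pixel. It is not the VILO implementation: the function name `lidar_to_depth_map`, the intrinsics `fx, fy, cx, cy`, and the assumption that the points are already expressed in the camera frame (z pointing forward) are all hypothetical.

```python
def lidar_to_depth_map(points, fx, fy, cx, cy, width, height):
    """Build a sparse depth map from lidar points given in the camera frame.

    points: iterable of (x, y, z) in metres, z along the optical axis.
    fx, fy, cx, cy: assumed pinhole intrinsics in pixels (hypothetical values).
    Returns a height x width grid holding the nearest depth, or None if empty.
    """
    depth = [[None] * width for _ in range(height)]
    for x, y, z in points:
        if z <= 0.0:
            continue  # point is behind the camera, cannot be projected
        u = int(round(fx * x / z + cx))  # pixel column
        v = int(round(fy * y / z + cy))  # pixel row
        if 0 <= u < width and 0 <= v < height:
            # Several points can land on one pixel: keep the closest one.
            if depth[v][u] is None or z < depth[v][u]:
                depth[v][u] = z
    return depth
```

A real pipeline would first apply the lidar-to-camera extrinsic calibration and would typically densify the sparse map before fusing it with the visual odometry module.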

I am working on a manuscript, based on the results I collected during this visit, that is expected to be published as a conference paper.

Universitat Politècnica de Catalunya (UPC)

IRI

Barcelona Robot Lab

SAFEROBOTS - Assessing Robotic Safety

Raul Barbosa (University of Coimbra)

Robots are being put to use in scenarios where lives or very high economic values are at stake. Thus, robotic safety and dependability must be carefully addressed. The goal of this project is to assess the behaviour of robots containing defects in the code. The increasing size and complexity of robotic software lead to a proliferation of software defects, informally known as bugs, which are present in most software. While many software bugs create no safety risks, a subset of those faults can have unforeseeable consequences.

In some circumstances, robots fulfil safety-critical functions where failures lead to safety risks. Even a simple urban robot may cause risky situations by halting on a busy road. Therefore, evaluating failure modes and effects due to software faults is essential. To this end, the ucXception fault injector emulates realistic software faults in the source code, aiming to observe the effects and design appropriate fault tolerance mechanisms.

The project showed that software fault injection can be used to identify the failure modes of robots. A robot was programmed to perform a navigation task, followed by a detection task, and finally to move toward the detected object. The ucXception tool was used to insert realistic software faults in the source code of relevant modules (e.g., navigation, lidar detection). A frequent failure mode consists in the robot halting at some point during the tasks. While this may not be problematic if the robot has a safe-stop, one can foresee scenarios such as an urban robot halting in the middle of a busy intersection. Another, less frequent failure mode consists in the robot performing a task erroneously. A few experiments resulted in incorrect orientation and navigation, potentially leading to undesired situations in a real deployment. This clearly calls for strict attention to safety and reinforces the need for dependable robotics.

Universidad de Sevilla (USE)

Robotics, Vision and Control Group

Bobcat

FPGA SLAM 2D Lidar - The event camera and 3D lidar based LOAM-BOR SLAM sensor fusion system

Yuhong Fu

We build a system that collects data from a 16-line 3D lidar together with an event camera. Making the two sensors work together requires a special-purpose system.

This would greatly increase accuracy, but at the cost of enormous power consumption. Further research is needed.

Universitat Politècnica de Catalunya (UPC)

IRI

Barcelona Robot Lab

ID6WD - Test of a robot platform for last mile logistics

IDMIND

IDMind intends to perform different experiments in the “Barcelona Robot Lab” to evaluate the performance of a recent development of the company, a six-wheeled robot platform, guided by the following objectives:

- evaluation of the platform kinematics in different terrains and routes

- overall tests of the platform safety and robustness

- tests on the power autonomy

- tests of sensor behavior in an outdoor environment

- preliminary tests of autonomous navigation

IDMind is a producer of custom-made robot solutions. The company is developing this robot platform to operate in outdoor urban-like environments. The Barcelona Robot Lab offers an outdoor pedestrian area of 10,000 sqm on the UPC Nord campus, equipped with fixed cameras, Wi-Fi, 3G/4G and partial GPS coverage, and featuring buildings, open and covered areas, ramps and some vegetation. This makes it a relevant setting for deploying robots in a real yet controlled urban scenario.

With this development the company intends to complement its product offer with an outdoor robot platform for urban-like environments.

School of Advanced Studies Sant'Anna (SSSA)

The BioRobotics Institute

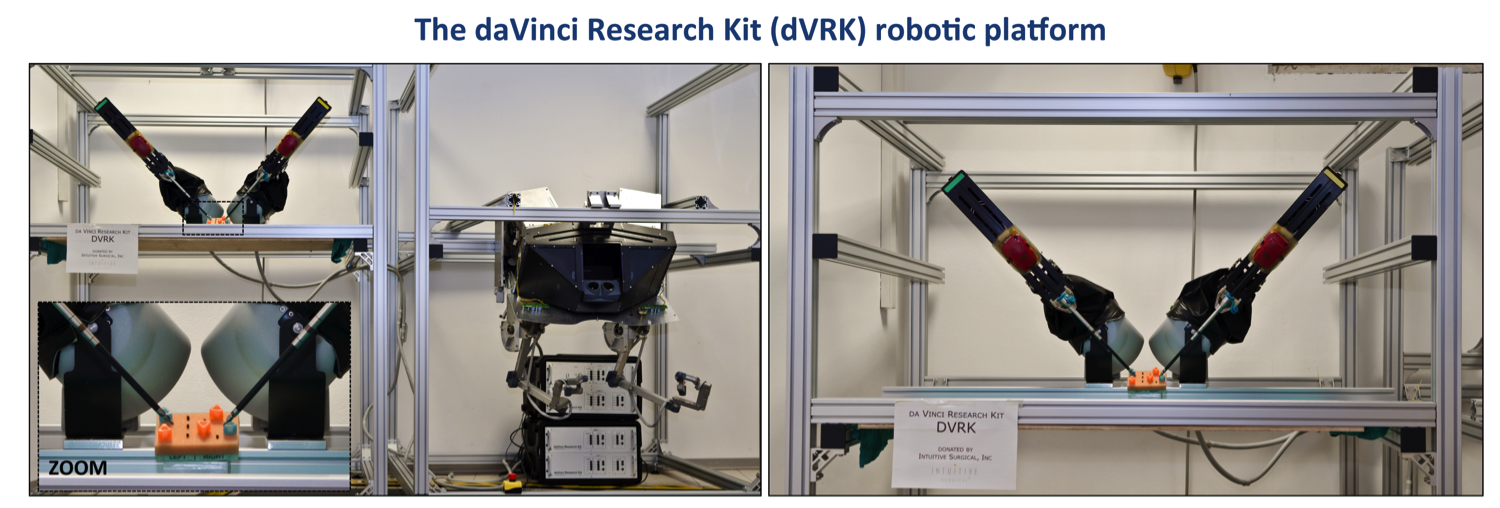

Da Vinci Research Kit (DVRK)

ROSMA - Robotics surgical maneuvers data acquisition

Irene Rivas Blanco

The global objective of this proposal is to build a large dataset of robotic surgical maneuvers using the da Vinci Research Kit (dVRK) platform. One of the trending research topics in the field of surgical robotics is the design of collaborative strategies so that robots can aid surgeons by performing certain actions autonomously. Automating parts of a surgery has many advantages, such as increased precision and accuracy, consistency in treatments, and greater dexterity and access to tissues. However, the adoption of autonomy in the surgical domain is still at an early stage. Thus, the dataset built during this project would be helpful for research in the field of autonomous robotic surgery. In particular, it will be used to advance the topic of collaborative surgical robots, exploring the use of Deep Learning techniques to perform autonomous auxiliary tasks in laparoscopic procedures.

The da Vinci surgical robot is the main reference in the field of surgical robotics, so most researchers addressing advances in this field use the research version of this platform, the dVRK, to perform their experiments. Building a database of robotic surgical maneuvers using this platform will give the user the possibility of performing several investigations derived from the data analysis, ranging from maneuver segmentation to autonomous task planning.

Moreover, the Department to which the applicant belongs has recently acquired a high-performance computer for applications in the field of cognitive robotics. Thus, the surgical robotics database will also be accessible to members of this department in order to explore Deep Learning techniques for task and/or object recognition and other applications that may be of interest to the department’s members. In addition, this database is expected to be used to carry out several end-of-degree projects with engineering students.

École Polytechnique Fédérale de Lausanne (EPFL)

Laboratory of Intelligent Systems

Motion capture arena

OPTIM-TUNE - In-flight automatic optimal tuning of UAV controllers for robust operation

Dariusz Horla, Wojciech Giernacki

The proposed research concentrates on developing a simple and applicable method to tune controllers (e.g., PD) widely used in both commercial and open-source flight controllers (such as Pixhawk, Naze32, CC3D Open Pilot) in a matter of minutes. Because of the variety of UAV applications, it is important to perform tuning in flight conditions, without modeling the dynamics of the drone, using an iterative approach. A literature study reveals no results for iterative controller autotuning that can be performed in this way during flight. OPTIM-TUNE will perform automated tuning of controllers, with a special view to the robustness of UAV control against changes in the propellers’ aerodynamic efficiency. OPTIM-TUNE could also be used to find the best model parameters in approaches that adopt partial knowledge of the UAV dynamics.
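To make the idea of model-free, iterative gain tuning concrete, here is a minimal sketch. It is not the OPTIM-TUNE algorithm, whose details are not given here: it assumes a coordinate-wise golden-section search over the two PD gains, and it replaces a real flight trial with a toy double-integrator simulation (`flight_cost`). All names, gain bounds and the cost function are hypothetical.

```python
import math

def flight_cost(kp, kd, dt=0.01, steps=500):
    """Integral of absolute error for a unit step on a toy double integrator.

    Stands in for one measured flight trial (an assumption of this sketch);
    the tuner below only sees the returned cost, never the model.
    """
    pos, vel, cost = 0.0, 0.0, 0.0
    for _ in range(steps):
        err = 1.0 - pos
        acc = kp * err - kd * vel  # PD law, derivative taken on the measurement
        vel += acc * dt
        pos += vel * dt
        cost += abs(err) * dt
    return cost

def golden_section(f, lo, hi, iters=20):
    """Shrink [lo, hi] around a local minimum of f, one evaluation per step."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:   # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:         # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2.0

def tune_pd(kp=1.0, kd=1.0, rounds=3, lo=0.1, hi=20.0):
    """Alternate 1-D searches over kp and kd; keep a gain only if cost drops."""
    best = flight_cost(kp, kd)
    for _ in range(rounds):
        cand = golden_section(lambda k: flight_cost(k, kd), lo, hi)
        cost = flight_cost(cand, kd)
        if cost < best:
            kp, best = cand, cost
        cand = golden_section(lambda k: flight_cost(kp, k), lo, hi)
        cost = flight_cost(kp, cand)
        if cost < best:
            kd, best = cand, cost
    return kp, kd, best
```

Calling `tune_pd()` returns the gains and the best cost found. On a real vehicle each call to `flight_cost` would be a short flight segment, so the number of evaluations (here about 22 per one-dimensional search) directly determines the tuning time.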

The proposed research is a natural extension of prior joint activities. It makes it possible to verify the theses previously presented and to develop new software tools for optimal auto-tuning of controllers, with special attention paid to robust, fault-tolerant control and to varying degrees of propeller effectiveness (Tilt-hex). The robotics laboratory to which the TNA is addressed has released recent publications related to such problems, such as the one on Tele-MAGMaS: Aerial-Ground Co-manipulator System, and one on a drag-optimal allocation method for variable-pitch propellers.

Karlsruhe Institute of Technology (KIT)

Institute of Anthropomatics and Robotics - High Performance Humanoid Technologies Lab (IAR H2T)

Human Motion Analysis with Vicon

HuRoMoD - Human and humanoid robots motion design and control using Hierarchical Systems technology

Kanstantsin Miatliuk

One of the project objectives is to create innovative formal and computer-based means for human and humanoid robot motion design using hierarchical systems (HS) technology. Another is to implement the HS design method in the design and control of human and humanoid robot motion, to verify that the HS method and the computer models developed correspond to the real processes of human and humanoid robot motion, and to assess the possibility of predicting and coordinating (designing and controlling) human motion using HS technology. To this end, a corresponding experimental study using the VICON platform should be performed, and the HS computer model should be integrated with the VICON system, Nexus 2.7, and the MMM software. This will extend the functional abilities of the HS method, bring new means of human and humanoid robot motion design, and open additional possibilities for implementing the developed HS technology. It will also align the proposed technology with the existing models and software tools of the modern VICON system used in human and humanoid robot motion analysis and design, and raise the efficiency of solving existing and new motion design and control problems.

The TNA gave an opportunity to perform experimental work on capturing and analyzing human motion in a modern laboratory environment using a glove with sensors, a Wacom Pro graphic tablet, an up-to-date VICON system, and Nexus 2.7 software. The experimental results obtained will allow verification of the functional abilities of the HS method and the creation of innovative formal and computer-based means for human and humanoid robot motion design and control. Establishing strong cooperation with colleagues from the Institute of Anthropomatics and Robotics (H2T), Karlsruher Institut für Technologie (KIT), is another impact of the project. The experience gained in joint work at H2T (KIT) will contribute to future research and help solve new, current problems of human and humanoid robot motion design and control using the author’s HS technology as well as the modern platforms and up-to-date software of H2T (KIT).

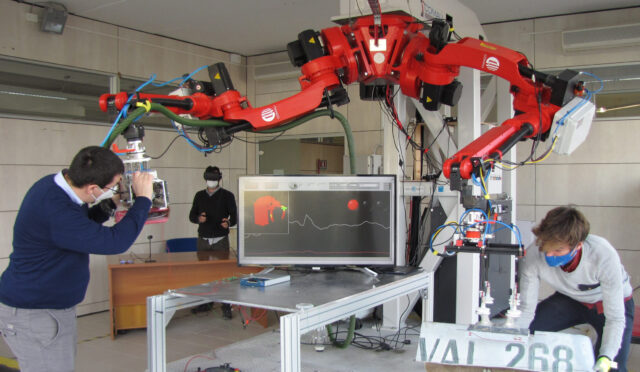

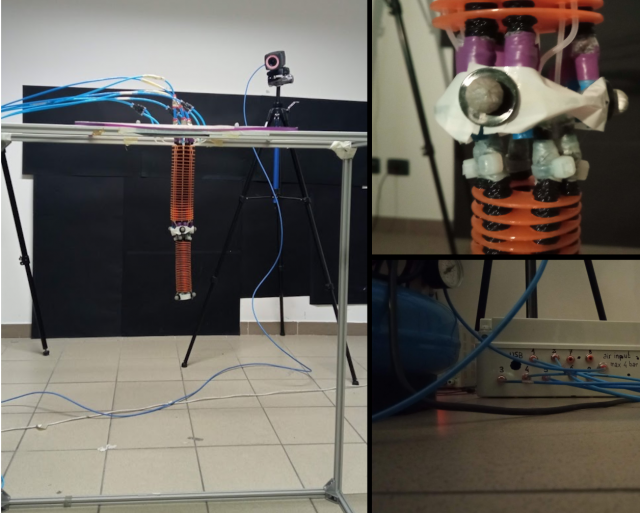

School of Advanced Studies Sant'Anna (SSSA)

The BioRobotics Institute

STIFF-FLOP soft manipulator

The STIFF-FLOP Algorithm Exchange - Testing of Position Controller of a Novel Growing Soft Robot

Burcu Seyidoğlu

The proposed study was to implement the control algorithm of a novel growing soft robot to the STIFF-FLOP soft manipulator. The project objectives are listed below:

• Enhancing the end-effector’s position accuracy by using the novel growing soft robot’s algorithm, which includes deformation correction.

• The consideration of external loads applied to the end-effector, which affect tip positioning.

• Increasing the overall performance of the tip positioning by exchanging the algorithms.

• Making the overall position information of the end-effector more precise, accurate, and robust.

There are three possible impacts of the proposed study. Firstly, the proposed development of the algorithm and a successful algorithm exchange can increase the tip positioning accuracy of the STIFF-FLOP and thus extend its applications to new areas of use. Secondly, it can also affect future applications of the novel growing soft robot developed by the home institution, by increasing its accuracy and robustness, and thus increase its use, especially in surgical robotic applications. Finally, in the long term, it can draw attention to this field and lead to new technological improvements and solutions.

Universidad de Sevilla (USE)

Robotics, Vision and Control Group

RX-90

RoboView&Mach - Intelligent system for machining of unknown shape using industrial robot RX90 with vision system

Tadeusz Mikolajczyk

The aim of the project was to determine the possibilities and conditions for transferring our own concept of an intelligent machining system to the RX90 robot available as part of the TERRINet platform at the University of Seville. The concept uses an industrial robot equipped with a tool for surface shape recognition, machining based on the view of the surface, and on-line recognition of surface quality. Because this robot had an open control system developed for the Linux platform in C++, it was possible to use elements of the proposed intelligent machining system with surface shape recognition. After analyzing the capabilities of the RX90 robot, which was equipped with a USB camera mounted on the robot's head, the main objective became to automatically prepare a control file for the RX90 robot from a view of a flat surface captured by the USB camera and processed with our own dedicated RoboView software.

Because the developed intelligent machining system software was implemented in VB6, it was possible to develop an off-line system during a short stay. The RoboView software was modified so that, based on image analysis, it directly generated code with the X, Y, Z parameters of the robot's trajectory, together with the A, B, C values of head rotation entered from the keyboard. The result of the image analysis was a .txt file of code for controlling the RX90 robot. Tests of the automatically generated control files on the RX90 robot confirmed that the development was correct: the robot's movements were consistent with the assumptions. This creates the conditions for porting the VB6 source code to C++ for on-line programming of the RX90 robot for machining with automatic recognition of the surface view. It also opens the way to implementing other aspects of the intelligent machining system using a robot equipped with a tool.

Centre national de la recherche scientifique (CNRS)

The Department of Robotics of LAAS

Motion Capture Facilities

SMILE - Structure-level Multi-sensor Indoor Localization Experiment

Piotr Skrzypczynski, Jan Wietrzykowski

The SMILE project aimed at producing a new dataset that facilitates the development of indoor localization methods through machine learning and the evaluation of such methods. The core idea is that the availability of accurate multi-sensor data describing highly structured indoor scenes would allow us to develop new, learning-based methods for scene description with structured geometric features, such as planar segments and edges. The scene description should support the task of agent localization. Obtaining such a description is challenging for passive cameras, which are often used in personal indoor localization or augmented reality setups. To address these difficulties, we automatically supervise the learning of the scene representation with ground truth trajectories from a motion capture system and depth data obtained from a 3-D laser scanner. Moreover, we embed in the dataset additional localization cues coming from an Inertial Measurement Unit (IMU). The facility available at LAAS-CNRS within the TERRINet TNA program made it possible to collect the necessary data.

The result of the SMILE experiment is a large dataset of indoor data that should be useful in our research on data-driven methods for structure-level scene description. The fact that the main outcome of the experiment is a dataset, not direct scientific results, allows us to re-use the collected data many times for different localization-related research. Moreover, within the TERRINet TNA program, we have gained experience in collecting large datasets, in data analysis, and in generating optimal experiment scenarios. Our long-term goal is to improve the PlaneLoc system (Wietrzykowski & Skrzypczynski, 2019) by enabling operation with passive vision systems, which will make it much more practical for personal localization, large-scale augmented reality applications, and service robots. A proper dataset for learning and evaluation plays an essential role in achieving this goal, and participation in TERRINet brought these plans closer to reality.

School of Advanced Studies Sant'Anna (SSSA)

The BioRobotics Institute

COMAU Dual arm robot

SaITA - DAR - Associate professor

Marek Vagaš

The project's main objective is to develop a skilled and innovative training approach for dual arm robotics, and for automation as a whole, in order to increase SME growth, improve employability, promote entrepreneurship, and give students experience that is otherwise hard to obtain. The selected priorities are based on the context of Education 4.0 and on the current needs of entrepreneurs, who want to develop their potential SME employees, and of students, who want to improve their qualifications. According to many skilled robotics developers and integrators, current graduates are insufficiently prepared for these new robotic challenges.

The use of dual arm robots in automated workplaces in high-performance industrial environments continues to grow, making them increasingly available for innovative customized solutions. These reasons led us to take a closer look at the COMAU robotic system as a flexible system with customized motion algorithms. On the one hand, the project will raise the level of education in the field of dual arm robotics; on the other hand, it will support sharing and verifying the acquired skills with other professionals and SME employees, who are key to realizing advanced automated solutions. The project results include (inter alia) relevant knowledge of the selected COMAU robotic system, skills in its operation, and familiarity with the principles of its implementation in automated workplaces, all of which can be used on a lasting basis in cooperation with companies and in teaching itself.

Centre national de la recherche scientifique (CNRS)

The Department of Robotics of LAAS

PAL Robotics Pyrène

PyreneBox - Lecturer

Dimitrios Kanoulas

This project aims at using a humanoid robot (PAL Robotics Pyrene) for powerful manipulation. In particular, the project lies in the area of vision-based perception for identifying boxes to be grasped and placed in a different position in the environment. The boxes will be heavy enough to require whole-body grasping, i.e. grasping that requires the use of the arms and the upper body of the humanoid robot. The focus will be on identifying and localizing a box in the environment and on finding grasp contact points for whole-body encapsulated grasping, which may also require a visual reconstruction method with the robot moving around the target box. Moreover, special care will be given to estimating the weight of the box while trying to grasp it, using the wrist force-torque sensors of the robot. This will allow decisions on whether the box is graspable by hand or requires whole-body grasping.

The Applicant Researcher (AR) will expand his knowledge of legged robots, as well as his research background and profile for his future academic career. Having the opportunity to work on one of the few humanoid robots in Europe (PAL Robotics Pyrene) provides an extra benefit in this attempt. The working experience on this robot, which is new to the AR, and the potential transfer of knowledge to the AR's research group (mobile manipulation) also make the project more interesting and useful. This will attract not only PhD students, but also MSc/BSc students to work on similar term projects. Last but not least, several software packages and novel research will be produced and released for the robotics research community, targeting loco-manipulation tasks for full-size humanoid robots.

Technical University Munich (TUM)

Robotics and Embedded Systems

Electric Car Testbed

EV-DSIM - Driving simulator enhancement based on the measurements offered by the electric car testbed

Csaba Antonya

The aim of this research project is to connect the Electric Car Testbed of the Technical University of Munich (TUM) to an existing driving simulator of the Transilvania University of Brasov (UTBv). The purpose of this connection is to test the real-time driving of an electric car based on measured data offered by the testbed. The benefit of the connection is that the virtual car of the driving simulator is enhanced by measured and validated data. This connection can be used to simulate driving conditions and to evaluate the performance of wheel drive control algorithms in scenarios where the user can be aware of the dynamic feedback (movement) of the car. The research questions concern the development and validation of the dynamic model of the vehicle based on the measurements offered by the electric car testbed, and the data transfer between the testbed and the simulator.

Testing virtual cars in a multi-modal virtual environment is an important step in the validation process of new concepts and technologies. The measured data obtained from the Electric Car Testbed of the TUM will be used to validate the dynamic vehicle model of the existing UTBv driving simulator. The connection between the TUM testbed and the UTBv simulator will enable real-time testing and driving of virtual cars based on measured data offered by the TUM testbed. The new UTBv driving simulators will provide a virtual driving environment for this Roding Roadster electric car, resembling real driving conditions. This environment opens the door to powerful virtual prototyping and testing tools, both for new electric car configurations and for analyzing driver behavior in a safe environment.

École Polytechnique Fédérale de Lausanne (EPFL)

Laboratory of Intelligent Systems

Motion capture arena

Coordinated movement of a swarm of nonholonomic wheeled robots modeled as virtual visco-elastic body

Jakub Wiech

The project objective is to verify the proposed control algorithms on 5 nonholonomic mobile robots using the OptiTrack motion capture system. The experiments would allow adjustment of the control parameters to achieve better performance of the swarm movement. The control algorithms are: virtual spring-damper mesh control, distributed PD control, artificial potential control, and the worm creep algorithm. In theory, all of these algorithms allow swarm movement in environments with and without obstacles.
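The virtual spring-damper idea named above can be sketched as follows. This is a generic illustration, not the project's controller: the function names, the gains `k` and `c`, and the rest length are hypothetical, and the mapping from the resulting planar force to the wheel commands of a nonholonomic robot is deliberately left out.

```python
import math

def spring_damper_force(p_i, v_i, p_j, v_j, rest_len, k=1.0, c=0.5):
    """Virtual force on robot i from a spring-damper link to robot j (2D).

    The spring pulls i toward j when the link is longer than rest_len and
    pushes it away when shorter; the damper opposes their relative speed
    along the link. k and c are assumed (hypothetical) gains.
    """
    dx, dy = p_j[0] - p_i[0], p_j[1] - p_i[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # direction undefined for coincident robots
    ux, uy = dx / dist, dy / dist
    rel_speed = (v_j[0] - v_i[0]) * ux + (v_j[1] - v_i[1]) * uy
    mag = k * (dist - rest_len) + c * rel_speed
    return (mag * ux, mag * uy)

def net_force(i, positions, velocities, neighbours, rest_len, k=1.0, c=0.5):
    """Sum the virtual link forces acting on robot i over its mesh neighbours."""
    fx = fy = 0.0
    for j in neighbours:
        f = spring_damper_force(positions[i], velocities[i],
                                positions[j], velocities[j], rest_len, k, c)
        fx += f[0]
        fy += f[1]
    return (fx, fy)
```

Each robot would evaluate `net_force` using only its mesh neighbours, which keeps the scheme distributed; a motion capture system can supply the positions and velocities during experiments.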

The project allowed for verification of 2 control algorithms on 5 nonholonomic mobile robots using the OptiTrack motion capture system: virtual spring-damper mesh control with and without obstacles, and swarm self-organization using the worm creep algorithm. The experiments allowed for adjustment of the control parameters to achieve better performance of the swarm movement.

École Polytechnique Fédérale de Lausanne (EPFL)

BioRobotics Lab

Swimming Pool and Flow tank

Sync&Swim - doctor

Johann Herault

Aquatic animals such as eels perform undulatory swimming, which consists of accelerating the fluid to create thrust. The gaits are generated by the coordination/synchronization of central pattern generators (CPGs) producing a rhythmic pattern. While short-range coupling between CPGs can be provided by local connections, the origin of long-range interactions remains an open question. Could long-range coupling between CPGs be provided by hydrodynamic sensory feedback?

The aim of the experiment is to investigate the efficiency of feedback based on hydrodynamic pressure in synchronizing a network of CPGs. These oscillators will control the servomotors of a 9-link swimming robot. The runs will be performed with a robot designed at EPFL. The results will then be compared to our theoretical models based on linear analysis of the body response and synchronization theory. Finally, we aim to determine the necessary and sufficient conditions to synchronize the oscillators.
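For readers unfamiliar with CPG networks, the sketch below shows the baseline case of short-range coupling only: a chain of identical phase oscillators with nearest-neighbour coupling (a Kuramoto-type model) that settles into a travelling wave with a fixed phase lag between neighbours. It is a generic textbook-style model, not the project's controller, and it does not include the hydrodynamic pressure feedback under study. The number of oscillators, frequency, coupling strength and lag are hypothetical values.

```python
import math

def step_cpg(phases, freq_hz, coupling, lag, dt):
    """One explicit Euler step of a chain of coupled phase oscillators.

    Each oscillator is pulled toward a phase 'lag' behind its upstream
    neighbour and 'lag' ahead of its downstream neighbour.
    """
    n = len(phases)
    new_phases = []
    for i, th in enumerate(phases):
        dth = 2.0 * math.pi * freq_hz  # intrinsic frequency in rad/s
        if i > 0:
            dth += coupling * math.sin(phases[i - 1] - th - lag)
        if i < n - 1:
            dth += coupling * math.sin(phases[i + 1] - th + lag)
        new_phases.append(th + dth * dt)
    return new_phases

def run_cpg(n=8, freq_hz=1.0, coupling=5.0, lag=0.5, dt=0.01, steps=5000):
    """Start all oscillators in phase and integrate the chain."""
    phases = [0.0] * n
    for _ in range(steps):
        phases = step_cpg(phases, freq_hz, coupling, lag, dt)
    return phases

def joint_angles(phases, amplitude=0.4):
    """Map oscillator phases to joint setpoints (radians) for the servos."""
    return [amplitude * math.sin(th) for th in phases]
```

After the transient, consecutive oscillators differ by the chosen lag, which produces the head-to-tail wave used in undulatory swimming. The experiment asks whether a sensory feedback term could take over the role that the explicit neighbour coupling plays in this sketch.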

We expect impacts on different fields of research:

- Biology: the results will show how exteroceptive sensory feedback influences limb coordination, and what the role of peripheral control is relative to central neural control.

- Non-linear sciences: we seek to understand, theoretically and experimentally, the relevant parameters determining the synchronizability of the network, a long-standing problem in non-linear sciences.

- Robotics: we aim to design innovative bio-inspired command laws for robots.

University of Twente (UT)

Department of Robotics

LOPES

FlowWalk - Velocity-field-based Assist As Needed control in LOPES II

Hocoma AG (Jan Veneman, Technical Project Lead)

The goal of this experiment was to evaluate a recently published control scheme that was developed for interactive “assist-as-needed” control in an autonomous exoskeleton, in the context of a treadmill based (stationary, non-autonomous) gait trainer (LOPES II).

The principles of this controller are explained in a publication, and the Matlab code was kindly provided by the authors for further evaluation.

In the experiment the goal was to check whether the algorithm would be perceived as natural, intuitive support to a healthy gait pattern, and would increase support when the healthy test subject would reduce their effort.

- Martínez, A., Lawson, B., Durrough, C., & Goldfarb, M. (2018). A Velocity-Field-Based Controller for Assisting Leg Movement During Walking With a Bilateral Hip and Knee Lower Limb Exoskeleton. IEEE Transactions on Robotics.

The control scheme could be of interest for implementation on the Hocoma Lokomat®; however, this would require substantial hardware changes to the device. Evaluating whether the flow-field-based control principle is simple to implement and feels intuitive to an (initially) healthy user when implemented on a stationary gait trainer (like LOPES II or Hocoma Lokomat®) instead of on an autonomous exoskeleton (like the Parker Indego used in the study) would help Hocoma decide whether it is worthwhile to make hardware improvements to the Lokomat® to support this type of control. Once implemented in a commercial device, this work could facilitate larger clinical studies into the clinical effectiveness of such Assist As Needed training approaches for gait recovery in neurological patients. In general, it facilitates further improvement of gait rehabilitation using robotic devices.

École Polytechnique Fédérale de Lausanne (EPFL)

Laboratory of Intelligent Systems

eBee drone

LIT - Litter Identification and Tracking

Olaya Álvarez-Tuñón

The overall objective of the project is to provide a platform for the identification and tracking of litter in nature using the eBee sensors. This will be achieved through the following intermediate objectives:

• Generation of a database: deployment of the robot on the considered areas of interest.

• Object detection: detection and identification of objects in the given area. Retrieval of shape and (GPS) location of the objects detected as litter.

• Shape measurement of the detected objects.

• Computation of high concentration areas.
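As an illustration of the last objective, one simple way to compute high-concentration areas is to bin the GPS positions of detected litter into a regular grid and keep the cells whose count exceeds a threshold. The Python sketch below assumes this approach; the cell size, the threshold, the function name and the sample coordinates are hypothetical and not taken from the project.

```python
import math
from collections import Counter

def hotspot_cells(detections, cell_deg=0.001, min_count=3):
    """Group (lat, lon) detections into square grid cells of `cell_deg`
    degrees and return the cells holding at least `min_count` items.
    Keys are integer (row, col) cell indices; values are item counts."""
    counts = Counter(
        (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        for lat, lon in detections
    )
    return {cell: n for cell, n in counts.items() if n >= min_count}

if __name__ == "__main__":
    # Made-up detections: three close together, one far away.
    detections = [
        (46.51915, 6.56615),
        (46.51925, 6.56625),
        (46.51935, 6.56635),
        (46.53055, 6.57055),
    ]
    for cell, n in hotspot_cells(detections).items():
        print(f"cell {cell}: {n} litter items")
```

A fixed grid is the simplest choice; a density estimate or a clustering method could be substituted without changing the rest of the pipeline.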

The areas will be selected after a study of the best possible candidates (carried out before the stay at the university): clear areas (not covered by vegetation), areas frequently travelled by a significant volume of people, areas of interest due to the environmental impact of litter on them (close to rivers, protected areas, etc.), and so on.

Academic publications are expected to result from the work developed in this project. The collaboration between Lausanne University and University Carlos III of Madrid could be very enriching, as both can contribute their experience and workflows. Finally, this collaboration will establish a network between both universities, which would allow us to develop future work together and to keep working on the project even after the stay.

University of the West of England (UWE Bristol)

Robotics Innovation Facility

Universal Robots UR5

SSSA - The development of multi cleaning tasks in simulation and real environment using meta and multi-task reinforcement learning

Jaeseok Kim

The objective of this project is to develop algorithms that generalize cleaning manipulation tasks in simulated and real environments. Building on the state of the art, meta and multi-task reinforcement learning (RL) algorithms are used to learn the tasks from a small amount of data and a shared structure. I organized this research around simulated and real robot manipulation tasks, namely everyday table cleaning with a Universal Robots UR5 and a Robotiq gripper.

The main impact will be two-fold: (i) scientific and (ii) career development.

Scientific impact:

(i) Implementation, testing, and validation of research ideas will support the publication of the results in top robotics journals and conferences, and boost the impact of the research results.

(ii) It opens the possibility of working together on joint European projects and may create job opportunities in the future.

Career development impact:

(i) The rich academic environment of the Department of Computing, which is part of the University of Bristol, offers a diverse range of classes.

École Polytechnique Fédérale de Lausanne (EPFL)

Laboratory of Intelligent Systems

Motion capture arena

SPARCdrone - Safe, precise, agile, robust cargo drone for last-centimeter delivery

Wojciech Giernacki, Dariusz Horla, Marek Retinger

The proposed research concentrates on the use of machine learning algorithms to measurably improve the quality and safety of autonomous cargo-drone flights for last-centimeter (<5 km) person-to-person delivery.

Objective 1: Conducting extensive experimental tests in the Laboratory of Intelligent Systems using an in-flight, real-time, model-free, minimum-seeking auto-tuning method (based on the golden-search algorithm) to obtain sets of optimal position controller parameters for the cargo drone; at a later stage, a bank of controllers should be obtained, based on selected cost functions, in order to deploy safe, precise, agile and robust flight controllers in the presence of wind gusts.

Objective 2: Conducting research at the Laboratory of Intelligent Systems (using a wind tunnel) to optimize the autonomous landing process of the cargo drone under various wind-gust conditions, in order to obtain minimum-landing-time or minimum-energy solutions.
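The minimum-seeking step in Objective 1 can be illustrated with a golden-section search, which we assume is what "golden-search" refers to. The Python sketch below is not the project's tuning code: the function name, the gain range and the quadratic cost (a stand-in for a cost measured during a flight trial, such as accumulated tracking error) are hypothetical. It shows why the method suits in-flight tuning: it needs no model, only cost evaluations, and each iteration costs exactly one new evaluation.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618

def golden_section_minimize(cost, lo, hi, tol=1e-3):
    """Shrink [lo, hi] around the minimum of a unimodal `cost`.
    Only cost evaluations are used; no model or gradient is needed."""
    c = hi - INV_PHI * (hi - lo)
    d = lo + INV_PHI * (hi - lo)
    fc, fd = cost(c), cost(d)
    while hi - lo > tol:
        if fc < fd:
            # Minimum lies in [lo, d]; reuse c as the new upper probe.
            hi, d, fd = d, c, fc
            c = hi - INV_PHI * (hi - lo)
            fc = cost(c)
        else:
            # Minimum lies in [c, hi]; reuse d as the new lower probe.
            lo, c, fc = c, d, fd
            d = lo + INV_PHI * (hi - lo)
            fd = cost(d)
    return (lo + hi) / 2

if __name__ == "__main__":
    # Hypothetical stand-in for "fly one trial with gain k, return its cost".
    def trial_cost(k):
        return (k - 2.5) ** 2 + 0.1

    best_gain = golden_section_minimize(trial_cost, 0.0, 10.0)
    print(f"tuned gain: {best_gain:.3f}")
```

Repeating such a search for each cost function or wind condition would yield one parameter set per case, which is one way to read the "bank of controllers" mentioned above.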

The proposed research will enable the development of new solutions for optimizing the auto-landing process and for safe, precise, agile and robust point-to-point flights of cargo drones for last-centimeter delivery. Solutions from the areas of control theory, machine intelligence and optimization techniques are expected to yield new knowledge and extend the state of the art.

Imperial College London (IMPERIAL)

The Hamlyn Centre

Multi Terrain Flight Arena

Butterflies - Test-bed preparation for multi-source indoor hazardous environment to perform simultaneous localization inspired by multi-butterfly paradigm

Chakravarthi Jada

The main objective of this project is to prepare a hazardous-environment test-bed model in an indoor space and to collect the sensor data set needed to implement the Butterfly Mating Optimization (BMO) algorithm in 3D coordinate space. Creating the hazardous environment involves setting up multiple fire and smoke sources (both static and dynamic) in the arena. The data will be collected and the BMO paradigm applied to co-locate the signal sources. This will provide an opportunity to use multiple quadrotors or other mobile aerial swarm robots to localize the sources in real time. The results of this project may inspire approaches to co-locating nuclear spills that appear suddenly in nuclear plants, detecting the location of unwanted gas leaks for immediate repair in industrial plants, detecting the latitude and longitude of oil spills in the ocean, and many other direct real-time applications.

We expect that the test-bed preparation and the planned experiments will have a significant impact on existing problems of simultaneous signal-source identification and on the methods currently used to address them. The readiness of the test-bed and the simulation results of the BMO paradigm should lead to a better problem formulation and a step-wise procedure for a full-scale real-time environment. Most importantly, these experiments will prepare us to use aerial vehicles such as quadrotors in extreme heat, humidity or smog, and to address the challenges involved. These experiments can also have a high impact on hazardous situations that remain unaddressed because humans cannot enter them, such as forest fires, nuclear plant spills and repairs, dynamic oil leaks at sea, and mid-air traffic management. These indoor environments and detection experiments will show a pathway for dealing with all of the above and with many other scientific problems in extreme scenarios.

Imperial College London (IMPERIAL)

The Hamlyn Centre

Multi Terrain Flight Arena

TUNER - Impact absorbing, lightweight metamaterials with tunable stiffness and density for robotics

Ozge Akbulut

Robots, in general, could benefit from components that improve their survival skills. Impact-absorbing components can potentially increase the durability and safety of robots, and contribute to the cost-effectiveness of robotic systems. In this project, we aim to design and fabricate a series of porous, composite elastomeric parts that can i) be triggered to decrease their density and ii) change their shape and stiffness when pressure or vacuum is applied.

Soft robotics is in its infancy and growing steeply; materials solutions combined with smart mechanical design can yield lightweight, impact-resistant, and responsive aerial devices. Flying robots, which are projected to be used in delivery, infrastructure maintenance and search & rescue, need to be able to survive sudden changes in the environment and mitigate unexpected forces during collisions. Giving robotic systems the ability to change shape and density would enable reusable, cheaper, and more robust offshore applications.

University of the West of England (UWE Bristol)

Bristol Robotics Laboratory

PAL Robotics Tiago

TR - Tiago Review

Mateusz Sadowski

The goal of the project is to get acquainted with the Tiago robotics platform and document it in a publicly available technical review of its system architecture, together with platform demonstrators showing the possibilities of the robot. A number of tests will be devised while working on the robot, and data from each representative run will be logged as a ROS bagfile so that it can be shared with the readers of the technical review, who can then reproduce some of the results from the logged data.

The foreseen impact is as follows:

• Working with a high-end robotics platform will increase my chances of attracting customers interested in platforms similar to Tiago.

• The skills developed while working on the project will be transferable to other robots I will work with in the future.

• An open technical review of the platform and its capabilities can be a good learning resource for robotics engineers and students pursuing robotics.

• By providing logged data, the results will be “open for discussion”: anyone will be able to comment on and contribute to them.

• This work can kick off the article section in the “Weekly Robotics” newsletter and potentially an “Architecture of Open Source Robotics” book.

University of the West of England (UWE Bristol)

Ambient Assisted Living Laboratory

Engineered Arts – SociBot mini

iRoniBot - Psychosocial Rehabilitation with a Humanoid Robot: Challenges and Perspectives

Maya Dimitrova

The main objective of the current project is to perform a preliminary assessment of the potential of SociBot to be included as a co-therapist in psychosocial rehabilitation sessions. The model situation is counseling: a user seeks the help of a professional to cope with undesirable situations by learning new social skills and strategies and by reformulating their personal goals.

The robot assistant SociBot mini will be employed to help assess, communicate, reflect, remind, repeat and mirror personal experiences in order to enhance the process of self-controlled attitude shift and to support the effort of the therapist. Two scenarios will be prepared with SociBot mini playing the role of a co-therapist in a therapeutic session.

At the AALL only the scenarios will be developed and the videotaping of the robot will be performed, without any actual human-robot interaction study taking place. The study of saccadic eye movement and/or EEG during a counselling session with a humanoid robot will be performed at IR-BAS, Bulgaria.

Scientific: A clusterisation of robot behaviours will be proposed and their appropriateness for real life implementation in pedagogical and psychosocial rehabilitation settings will be tested. The clusterisation will be along psychological dimensions, implementable in the respective “social sensors”.

Societal: All knowledge and skills learned will be shared with colleagues at IR-BAS. Future collaboration on joint projects between IR-BAS and AALL is also expected. IR-BAS has close connections, via the PR Office of the Bulgarian Academy of Sciences, with central and local media covering recent achievements in robotics with high societal value (www.ir.bas.bg). The institute is very well represented in social media and will popularize TERRINet by all available means.

University of the West of England (UWE Bristol)

Robotics Innovation Facility

KUKA KR60-3

RoboLayMan - Research of Layer Manufacturing of Polyurethane Foam Using Industrial Robot

Tadeusz Mikolajczyk

Layer manufacturing (LM) is a unique and fast technology for creating models of objects that can later be released to the consumer market. LM is carried out on dedicated prototyping machines that can produce rapid-prototyping models in anywhere from several hours to several days, depending on the complexity of the object. Because industrial robots are a special kind of NC machine, they can be equipped with machining tools and are also used in some LM processes. The idea of the proposed project is to use an industrial robot for LM with an original method proposed by the author. An LM process using polyurethane foam is proposed, particularly for producing large models. Robots are sometimes used in layer manufacturing processes, especially with the FDM method, ceramic materials or welding methods. The author has used this method with his own original injection system on an IRb60 robot.

Because the proposed LM method can build models from a material of low specific gravity, the technique is especially convenient for forming large models. Building large-scale models layer by layer in this way is a new idea. Implementing the method requires a robot equipped with a PC-controlled polyurethane foam applicator. Preliminary tests have identified the possibility of using this original technique for rapid prototyping. Applying the proposed method in the RoboLayMan project requires the development and construction of a specially controlled applicator combined with an industrial robot control system. The test results will allow a preliminary definition of the conditions for applying the new incremental shaping technique, which is convenient for shaping large models. In the first stage of application, one-component foam will be used. The technique could find wide application in many areas, and it is especially appropriate with chemically hardened polyurethane.

Imperial College London (IMPERIAL)

The Hamlyn Centre

Robotic Fast Prototyping Platform

RoboFoot - Research of Friction Between Parts of 3D Printed Innovative Walking Robot

Tadeusz Mikolajczyk

The authors invented original bipedal walking robots (BWR) with rotating feet. Two real models were presented: a 3-DOF BWR and a 4-DOF BWR. These robots were built from 3D-printed parts and are driven by gears that are also printed. Friction occurs wherever these mechanisms work together. Friction in elements made by 3D printing is of interest to scientists. The literature reports research on tribological test machines into the tribological properties of 3D-printed surfaces, such as friction coefficient and wear. The reported results show that 3D-printed parts made from soft materials can indeed be used as fully functional driving wheels on a smooth surface, such as an aluminum rail, as well as in other applications where high friction is desirable. The purpose of the work is to learn how both 3D printing methods and materials affect the friction conditions of a real product, using the BWR as an example.

The work carried out under the RoboFoot project makes it possible to understand how 3D-printed parts work together in BWR models. These models include both elements that work together in sliding motion (robot legs moving vertically) and gears that drive the legs or rotary feet. The developed structure may contain interacting elements made with the FDM technique (e.g. ABS or PLA), such as plastic elements in contact with metal surfaces. The layer manufacturing technique allows both the production of components for assembling devices and the production of complete devices composed of such elements, in this case BWR models, which can be started once they are equipped with drives and a control system. The quality of the interaction can be assessed by evaluating drive energy indicators as well as through model tests of the friction force and friction coefficient. The project will enable the development of technology for implementing BWR models. The results of the work can be generalized and used in other applications.

Technical University Munich (TUM)

Robotics and Embedded Systems

Cooperative Robotic Manufacturing Station

HRCLSCC - Human-Robot Cooperative Loading Sacks with Contact Changes

Ciprian Ion Rizescu

Robots are increasingly integrated into workplaces, with a significant impact on organizational structures and processes as well as on the products and services these companies create. While robots promise significant benefits for organizations, their introduction presents a variety of design challenges. This work proposes the creation of an operator-robot team to perform a complete packing operation. The concept involves a team of industrial robots, an operator and the industrial environment.

During the project the following tasks are to be considered:

1. Creation of the industrial robot team;

2. Operator co-operation as team leader;

3. Robot team learning with cooperation;

4. Carrying out the packing;

5. Dissemination of all information and case studies concerning cooperation between the operator and the robot team.

The scientific results of the project are:

• Theoretical and experimental research, development of the knowledge base of advanced robotics, and methods for testing, measuring and checking the subassemblies of the prehension devices;

• Creation of a team of robots that cooperate in the packing operation, coordinated by a human operator;

• The possibility of adapting the automatic packing line to any kind of sack material and to different sack sizes.

Universitat Politècnica de Catalunya (UPC)

IRI

Tibi and Dabo robots

PNP4HRI - Planning and plan execution in human-robot interaction

Luca Iocchi

The main objective of the project is to integrate a high-level planning and plan execution framework, Petri Net Plans (PNP), with the perception and navigation functionalities of a robot involved in human-robot interaction (HRI) tasks.

PNP is developed at the DIAG Dept. of Sapienza University of Rome (and is also deployed in the AI4EU platform). The project aims to show its integration with the functionalities for HRI tasks developed at Universitat Politècnica de Catalunya - Institut de Robòtica i Informàtica Industrial (IRI) (also deployed in the AI4EU platform).

The integrated system will support high-level specification of goals and plans and will show improved robustness in complex situations arising from the unpredictability of HRI tasks.
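For readers unfamiliar with Petri nets as a plan representation, the Python sketch below shows the underlying token-firing mechanism on a toy interaction plan. It is a generic illustration only: it does not use or reproduce the PNP library or its API, and the place and transition names (go to the person, then greet them) are hypothetical.

```python
def enabled(marking, transition):
    """A transition is enabled when every input place holds a token."""
    return all(marking.get(p, 0) >= 1 for p in transition["inputs"])

def fire(marking, transition):
    """Consume one token per input place, produce one per output place."""
    if not enabled(marking, transition):
        raise ValueError(f"transition {transition['name']} is not enabled")
    new_marking = dict(marking)
    for p in transition["inputs"]:
        new_marking[p] -= 1
    for p in transition["outputs"]:
        new_marking[p] = new_marking.get(p, 0) + 1
    return new_marking

def run(marking, transitions):
    """Fire enabled transitions until none is left; return the final
    marking and the order in which the actions were executed."""
    trace = []
    progress = True
    while progress:
        progress = False
        for t in transitions:
            if enabled(marking, t):
                marking = fire(marking, t)
                trace.append(t["name"])
                progress = True
    return marking, trace

if __name__ == "__main__":
    # Hypothetical two-step HRI plan: approach a person, then greet them.
    plan = [
        {"name": "goto_person", "inputs": ["start"], "outputs": ["at_person"]},
        {"name": "greet", "inputs": ["at_person"], "outputs": ["done"]},
    ]
    final_marking, trace = run({"start": 1}, plan)
    print(trace, final_marking)
```

In a real system each transition would be tied to a robot action or a perception event rather than fired immediately, which is where the perception and navigation functionalities come in.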

Work in progress (remote collaboration):

Researchers at DIAG and at IRI have worked together so far to: 1) define use cases where the integration of PNP with HRI tasks is interesting, 2) analyze the individual components developed by the two partners, in particular the versions deployed in the AI4EU platform, and 3) integrate the AI4EU components.

Future work (physical visit(*)):

Complete and test the integration of the components during a physical visit of a student from Sapienza at IRI, experiments on IRI robots, and writing of a report (possibly a scientific paper) illustrating the work.

Expected impacts:

1) Tutorial on integrating planning and HRI components (in particular AI4EU components), 2) video showing implementation and execution of the use cases, 3) technical report / scientific paper describing the approach and the result.

(*) when possible according to COVID-19 restrictions.

Universidad de Sevilla (USE)

Robotics, Vision and Control Group

AMUSE

ESLAM - A lightweight and realtime SLAM for UAVs based on Event cameras

Sherif A.S. Mohamed

Collect data by mounting our DAVIS camera on the AMUSE platform and flying different routes and scenarios. We expect to use the extracted data to complete one of our ongoing works and to publish it in a peer-reviewed journal.