Robotic Database - Single Infrastructure | TERRINet

Address: C/ Llorens i Artigas 4-6, 08028 Barcelona, Spain

Website

http://www.iri.upc.edu/

Scientific Responsible

Alberto Sanfeliu

Antoni Grau-Saldes

About

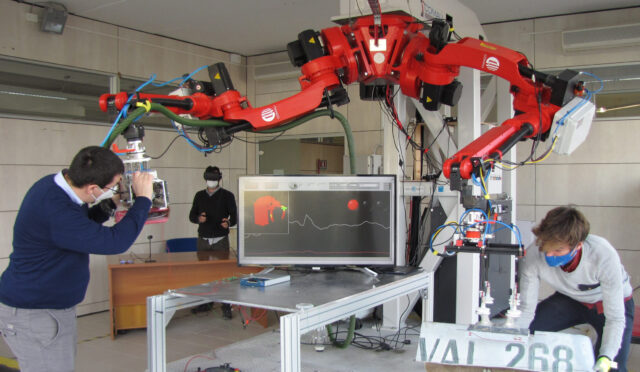

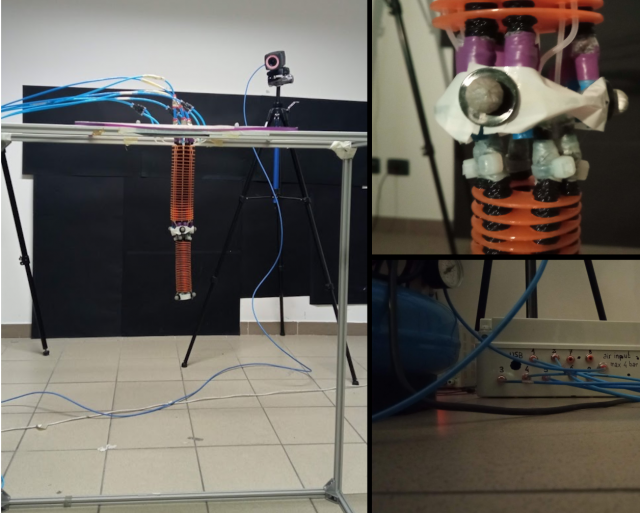

The Institut de Robòtica i Informàtica Industrial (IRI) is a Joint University Research Institute of the Spanish Council for Scientific Research (CSIC) and the Technical University of Catalonia (UPC) that conducts basic and applied research in human-centered robotics and automatic control.

The IRI offers access to the scientific and technological areas of the Barcelona Robot Lab (BRL) (http://www.iri.upc.edu/research/webprojects/barcelonarobotlab/) for outdoor and indoor experiments in mobile robotics and human-robot interaction and collaboration. The BRL includes an outdoor pedestrian area of 10,000 sqm on the UPC North Campus and an indoor area of 80 sqm with an Optitrack installation. The BRL makes it possible to experiment in public spaces, deploying robots in real and controlled urban scenarios to perform navigation and human-robot interaction and collaboration experiments with end users and participants. The Barcelona Robot Lab also offers a simulation software package with a 2D/3D scenario view, in which any experiment can be validated before it is run in real life, as well as technical support throughout the experimentation process.

Presentation of platforms

Available platforms

Barcelona Robot Lab

Outdoor pedestrian area of 10,000 sqm on the UPC North Campus, equipped with fixed cameras, Wi-Fi, 3G/4G and partial GPS coverage, and featuring buildings, open and covered areas, ramps and some vegetation. Several public space scenarios, such as markets, bars or shops, can be set up in this area in order to deploy robots in a real and controlled urban scenario and perform navigation and human-robot interaction and collaboration experiments for multiple applications.

Optitrack indoor testbed

Indoor testbed based on an indoor positioning system that uses 20 Optitrack Flex13 infrared cameras. This system can calculate the position and orientation of moving objects, previously tagged with special markers, within the volume of the testbed (11x7x2.5 m) in real time (with an update rate of up to 120 Hz).
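As a rough planning aid, the figures quoted above (an 11 x 7 x 2.5 m volume and an update rate of up to 120 Hz) can be turned into a small helper. This is only a sketch: the class name `TrackingVolume`, its methods, and the choice of placing the coordinate origin at one floor corner of the testbed are assumptions made for illustration, not part of the Optitrack software or of the BRL setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingVolume:
    """Hypothetical model of the indoor testbed, using the figures quoted above."""

    length_m: float = 11.0     # testbed length
    width_m: float = 7.0       # testbed width
    height_m: float = 2.5      # testbed height
    max_rate_hz: float = 120.0  # maximum update rate

    def contains(self, x: float, y: float, z: float) -> bool:
        # Assumption: origin at one floor corner, axes aligned with the testbed.
        return (
            0.0 <= x <= self.length_m
            and 0.0 <= y <= self.width_m
            and 0.0 <= z <= self.height_m
        )

    def floor_area_sqm(self) -> float:
        return self.length_m * self.width_m

    def min_sample_period_s(self) -> float:
        # Shortest time between two pose updates at the maximum rate.
        return 1.0 / self.max_rate_hz

    def travel_between_samples_m(self, speed_m_s: float) -> float:
        # Distance an object covers between two consecutive updates.
        return speed_m_s * self.min_sample_period_s()


testbed = TrackingVolume()
print(testbed.contains(5.0, 3.0, 1.0))        # planned waypoint inside the volume?
print(testbed.floor_area_sqm())               # footprint in square metres
print(testbed.travel_between_samples_m(1.0))  # metres travelled per update at 1 m/s
```

At the maximum rate, an object moving at 1 m/s travels a little over 8 mm between two consecutive pose updates, which gives a feel for the temporal resolution available when planning an experiment.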

Tibi and Dabo robots

Tibi and Dabo are two mobile urban service robots designed for navigation and human-robot interaction tasks. Navigation is based on the differential-drive Segway RMP200 platform, which can work in balancing mode, useful for overcoming low-slope ramps. Two 2D horizontal laser range sensors allow obstacle detection and localization. Human-robot interaction is achieved with two arms with 2 degrees of freedom (DoF) each, a 3-DoF head with some facial expressions, a stereo camera, text-to-speech software and a touch screen. The robots can provide information, guidance and steward services to people in urban spaces, either individually or working together.

Teo robot

Teo is a robot designed for 2D Simultaneous Localization And Mapping (SLAM) and 3D mapping. It is designed to work in both indoor and rugged outdoor areas. To perform these tasks, the robot is built on a skid-steer Segway RMP400 platform and carries two 2D horizontal laser range sensors, an inertial sensor (IMU), a GNSS receiver and a custom-built 3D sensor based on a rotating 2D vertical laser range sensor.

IVO

IVO is a mobile urban service robot designed for navigation, manipulation and human-robot interaction tasks. Navigation is based on a 4-wheel skid-steer platform, a front 3D lidar and depth camera, and a rear 2D lidar. Manipulation is based on two 7-DoF arms with wrist force-torque sensors, a 1-DoF gripper and a 3-DoF hand-like gripper. It is also equipped with a pan-and-tilt head with a depth camera, a microphone and speakers with text-to-speech software, and a touch screen.

Ana and Helena Pioneer robots

Ana and Helena are mobile urban service robots designed for navigation, human-robot interaction and package delivery tasks. Navigation is based on the skid-steer Pioneer 3AT platform, with a 3D lidar and a stereo camera for obstacle detection. Human-robot interaction is based on a pan-and-tilt camera, status feedback lights, text-to-speech software, a microphone and a touch screen.